1. http://www.hiredintech.com/system-design/scalability-fundamentals/

http://massivetechinterview.blogspot.in/2015/09/scalability-principles.html

David Malan Harvard: https://www.youtube.com/watch?v=zlTVcNxg38c

Vertical scaling

Horizontal scaling

Caching

Load balancing

Database replication

Database partitioning

2. Scalability in Distributed Systems

1. http://book.mixu.net/distsys/ebook.html#intro

2. https://xmruibi.gitbooks.io/interview-preparationnotes/content/SystemDesign/DistributedSystem.html

3. Storage/Database Scalability

3.1. Databse Shardinghttps://www.interviewbit.com/problems/sharding-a-database/

Let's design a sharding scheme for key-value storage.

Understanding System:

Features:

Features:

This is the first part of any system

design interview, coming up with the features which the system should support.

As an interviewee, you should try to list down all the features you can think

of which our system should support. Try to spend around 2 minutes for this

section in the interview. You can use the notes section alongside to remember

what you wrote.

- Q: What is

the amount of data that we need to store?

A: Let's assume a few 100 TB. - Q: Will

the data keep growing over time? If yes, then at what rate?

A: Yes. At the rate of 1TB per day. - Q: Can we

make assumptions about the storage of machines available with me?

A: Let's assume that machines have a RAM of 72G and a hard disk capacity of 10TB. - Q: How

many machines do I have to begin with?

A: Let's assume we have 20 machines to begin with. More machines will be available on request if need be. - Q: Are all

key value entries independent?

A: Yes. A typical query would ask for value corresponding to a key.

Estimation:

This is usually the second part of a design interview, coming up

with the estimated numbers of how scalable our system should be. Important

parameters to remember for this section is the number of queries per second and

the data which the system will be required to handle.

Try to spend around 5 minutes for this section in the interview.

Try to spend around 5 minutes for this section in the interview.

Total storage size : 100 TB as estimated earlier

Storage with every machine : 10TB

Q: What is the minimum number of machines required to store the data?

Storage with every machine : 10TB

Q: What is the minimum number of machines required to store the data?

A: Assuming a machine has 10TB of hard disk, we would

need minimum of 100TB / 10 TB = 10 machines to store the said data. Do note

that this is bare minimum. The actual number might be higher.

In this case, we have 20 machines at our disposal.

In this case, we have 20 machines at our disposal.

Q: How frequently would we need to add machines to

our pool ?

A: The data grows at 1TB per day. That means that we

generate data that would fill the storage of 1 machine ( 10TB ) in 10 days.

Assuming, we want to keep a storage utilization of less than 80%, we would need

to add a new machine every 8 days.

Deep Dive:

Lets dig deeper into every component one by one. Discussion for

this section will take majority of the interview time(20-30 minutes).

Note : In questions like these, the interviewer is looking

at how you approach designing a solution. So, saying that I’ll use a

distributed file system like HDFS is not a valid response. It's okay to discuss

the architecture of HDFS with details around how HDFS handles various scenarios

internally.

Q: Can we have a fixed number of shards?

A: One qualification for a shard is that the data

within a shard should fit on a single machine completely.

As in our case, the data is growing at a fast pace, if we have a fixed number of shards, data within a shard will keep growing and exceed the 10TB mark we have set per machine. Hence, we cannot have a fixed number of shards. The shards will have to increase with time.

As in our case, the data is growing at a fast pace, if we have a fixed number of shards, data within a shard will keep growing and exceed the 10TB mark we have set per machine. Hence, we cannot have a fixed number of shards. The shards will have to increase with time.

Q: How many shards do we have and how do we

distribute the data within the shard?

A: Lets say our number of shards is S. One way to

shard is that for every key, we calculate a numeric hash H, and assign the key

to the shard corresponding to H % S.

There is one problem here though. As we discussed earlier, the number of shards will have to increase. And when it does, our new number of shard becomes S+1.

As, such H%(S+1) changes for every single key causing us to relocate each and every key in our data store. This is extremely expensive and highly undesirable.

There is one problem here though. As we discussed earlier, the number of shards will have to increase. And when it does, our new number of shard becomes S+1.

As, such H%(S+1) changes for every single key causing us to relocate each and every key in our data store. This is extremely expensive and highly undesirable.

Q: Can we think of a better sharding strategy?

Hint: Consistent Hashing.

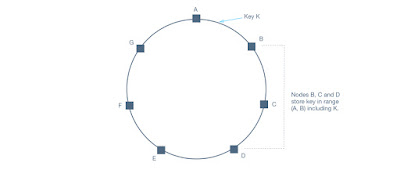

A: Consistent hashing is ideal for the situation

described here. Lets explore consistent hashing here.

Let's say we calculate a 64 bit integer hash for every key and map it to a ring. Lets say we start with X shards. Each shard is assigned a position on the ring as well. Each key maps to the first shard on the ring in clockwise direction.

Let's say we calculate a 64 bit integer hash for every key and map it to a ring. Lets say we start with X shards. Each shard is assigned a position on the ring as well. Each key maps to the first shard on the ring in clockwise direction.

What happens if we need to add another shard ? Or what if

one of the shard goes down and we need to re-distribute the data among

remaining shards?

Similarily, there is a problem of cascading failure when a

shard goes down.

Modified consistent hashing

What if we slightly changed the ring so that instead of one copy per shard, now we have multiple copies of the same shard spread over the ring.

What if we slightly changed the ring so that instead of one copy per shard, now we have multiple copies of the same shard spread over the ring.

Case when new shard is added :

Case when a shard goes down : No cascading failure. Yay!

3.2 Highly Available database:

https://www.interviewbit.com/problems/highly-available-database/

Features:

This is the first part of any system

design interview, coming up with the features which the system should support.

As an interviewee, you should try to list down all the features you can think

of which our system should support. Try to spend around 2 minutes for this

section in the interview. You can use the notes section alongside to remember

what you wrote.

- Q: What is

the amount of data that we need to store?

A: Let's assume a few 100 TB. - Q: Do we

need to support updates?

A: Yes. - Q: Can the

size of the value for a key increase with updates?

A: Yes. In other words, its possible a sequence of keys could co-exist on one server previously, but with time, they grew to a size where all of them don't fit on a single machine. - Q: Can a

value be so big that it does not fit on a single machine?

A: No. Let's assume that there is an upper cap of 1GB to the size of the value. - Q: What

would the estimated QPS be for this DB?

A: Let's assume around 100k.

Estimation:

This is usually the second part of a design interview, coming up

with the estimated numbers of how scalable our system should be. Important

parameters to remember for this section is the number of queries per second and

the data which the system will be required to handle.

Try to spend around 5 minutes for this section in the interview.

Try to spend around 5 minutes for this section in the interview.

Total storage size : 100 TB as estimated earlier

Total estimated QPS : Around 100k

Q: What is the minimum number of machines required to store the data?

Total estimated QPS : Around 100k

Q: What is the minimum number of machines required to store the data?

A: Assuming a machine has 10TB of hard disk, we would

need minimum of 100TB / 10 TB = 10 machines to store the said data. Do note

that this is bare minimum. The actual number might be higher if we decide to

have replication or more machines incase we need more shards to lower the QPS

load on every shard.

Design

Goals:

·

Latency - Is this problem very latency sensitive (Or in other

words, Are requests with high latency and a failing request, equally bad?). For

example, search typeahead suggestions are useless if they take more than a

second.

·

Consistency - Does this problem require tight consistency? Or is it

okay if things are eventually consistent?

·

Availability - Does this problem require 100% availability?

There could be more goals depending on the problem. It's

possible that all parameters might be important, and some of them might

conflict. In that case, you’d need to prioritize one over the other.

Q: Is Latency a very important metric for us?

A: Since we want to be available all the time, we should try to

have lower latency.

Q: Consistency vs Availability?

A: As the question states, we need good availability and

partition tolerance.

Going by the CAP theorem ( Nicely explained at http://robertgreiner.com/2014/08/cap-theorem-revisited/ ), we would need to compromise with consistency if we have availability and partition tolerance.

We can however aim at having eventual consistency. As is the case with any storage system, data loss is not acceptable.

Going by the CAP theorem ( Nicely explained at http://robertgreiner.com/2014/08/cap-theorem-revisited/ ), we would need to compromise with consistency if we have availability and partition tolerance.

We can however aim at having eventual consistency. As is the case with any storage system, data loss is not acceptable.

Deep Dive:

Lets dig deeper into every component one by one. Discussion for

this section will take majority of the interview time(20-30 minutes).

Note : In questions like these, the interviewer is looking

at how you approach designing a solution. So, saying that I’ll use a NoSQL DB

like Cassandra is not an ideal answer. It is okay to discuss the architecture

of Cassandra for example with rationale around why some components were

designed the way they were..

Q: Is sharding required?

A: Lets look at our earlier estimate about the data

to be stored. 100TB of data can’t be stored on a single machine.

Lets say that we somehow have a really beefy machine which can store that amount of data, that machine would have to handle all of the queries ( All of the load ) which could lead to a significant performance hit.

Lets say that we somehow have a really beefy machine which can store that amount of data, that machine would have to handle all of the queries ( All of the load ) which could lead to a significant performance hit.

Tip: You could argue that there can be multiple copies of

the same machine, but this would not scale in the future. As my data grows, its

possible that I might not find a big beefy enough machine to fit my data.

So, the best course of action would be to shard the data and

distribute the load amongst multiple machines.

Q: Should the data stored be normalized?

(http://www.studytonight.com/dbms/database-normalization.php)

(http://www.studytonight.com/dbms/database-normalization.php)

A: If the data is normalized, then we need to join

across tables and across rows to fetch data. If the data is already sharded

across machine, any join across machines is highly undesirable ( High latency,

Less indexing support ).

With storing denormalized information however, we would be storing the same fields at more than one place. However, all information related to a row ( or a key ) would be on the same machine. This would lead to lower latency.

However, if the sharding criteria is not chosen properly, it could lead to consistency concerns ( After all, we are storing the same data at multiple places ). However, for this case, we are more concerned with availability and ready to compromise on consistency as long as things become eventually consistent.

In this case, it seems like having denormalized rows makes sharding easier for us and suits our use case better.

With storing denormalized information however, we would be storing the same fields at more than one place. However, all information related to a row ( or a key ) would be on the same machine. This would lead to lower latency.

However, if the sharding criteria is not chosen properly, it could lead to consistency concerns ( After all, we are storing the same data at multiple places ). However, for this case, we are more concerned with availability and ready to compromise on consistency as long as things become eventually consistent.

In this case, it seems like having denormalized rows makes sharding easier for us and suits our use case better.

Q: How many machines per shard ? How does a read /

write look in every shard?

A: Going back to our design goals, low latency and

high availability are our design goals.

Lets assume we have somehow sharded the rows into shards. Hence, lets first look at how the architecture might look at within a shard.

Lets assume we have somehow sharded the rows into shards. Hence, lets first look at how the architecture might look at within a shard.

Master Slave

One simple solution might be to have a master node in each

shard which has a slave node which reads all new updates from master and keeps

updating itself (The slave in this case might not have the same view as master

and would lag a little bit). Clients can read from either the master or the

slave depending on which responds earlier (or being slightly more intelligent

with the reads to give more preference to the master, and fallback to slave

after the read call to master). That could lead to inconsistent views on newer

entries across master and client, but would ensure high read availability.

However, what happens to writes when the master goes down. The writes start failing since only master was taking up the writes.

We can argue that we can have the slave become the new master in such a case. However, even that implies unavailability for the period of failover from master to the slave as new master.

Also, if the slave is lagging a bit, and then the master has a hardware failure, we run the risk of losing data.

This means that we definitely need more than one machine taking the write traffic if we are to be available all the time.

However, what happens to writes when the master goes down. The writes start failing since only master was taking up the writes.

We can argue that we can have the slave become the new master in such a case. However, even that implies unavailability for the period of failover from master to the slave as new master.

Also, if the slave is lagging a bit, and then the master has a hardware failure, we run the risk of losing data.

This means that we definitely need more than one machine taking the write traffic if we are to be available all the time.

Multi Master

Lets say we modify the previous design where both machines

accept write AND read traffic. Lets name the machine m1 and m2.

If m1 accepts write without depending on m2, then it is possible m1 is doing write on a row state which is not the same as m2. That could lead to huge consistency concerns and the DB might become forever inconsistent. DBs might get operations out of order and it can cause eventual inconsistency if the order of operation matters ( double the column value and then increment it vs increment the column value and then double it ).

From above examples we see that any system with a master node will not be highly available, therefore we move to peer to peer systems.

If m1 accepts write without depending on m2, then it is possible m1 is doing write on a row state which is not the same as m2. That could lead to huge consistency concerns and the DB might become forever inconsistent. DBs might get operations out of order and it can cause eventual inconsistency if the order of operation matters ( double the column value and then increment it vs increment the column value and then double it ).

From above examples we see that any system with a master node will not be highly available, therefore we move to peer to peer systems.

Q: Can a peer to peer system be highly available in

case of a DB machine dying?

Hint: Single point of failure!

A: We define a peer to peer system where every node

is equally privileged and any two nodes can communicate. Yes, since we don't

have a single point of failure anymore, therefore our system can theoretically

be available even in presence of dying DB machines. Dynamo and Cassandra are

examples of examples of such systems, both of them lack the master node

and therefore have no single point of failure. Our highly available datastore

will be highly based on Dynamo and Cassandra, as a reader you don't need to

know about them.

Q: How will we exactly shard data for a peer to peer

system?

A: Refer to https://www.interviewbit.com/problems/sharding-a-database/

for a detailed answer.

Q: How do we store redundant data?

A: We will need to store duplicate data to prevent data loss in

case of some of our DB machines getting corrupted. To store the data we can use

consistent hashing which assigns every data to a particular node on the ring.

Let's call P as our replication factor(we will store P copies of

data). Now for a data D, we have to choose P nodes where we will store

copies of D.

Now, how do we choose these P nodes? We will choose the P clockwise consecutive nodes starting from the node where D is mapped by our hashing function.

An important point to dicuss here is that even though any data might be mapped to a particular virtual node, the virtual node is not the master node for this data for either read or right. A client can request to read or write a data from any node they want. This is essential in creating a highly available system.

Now, how do we choose these P nodes? We will choose the P clockwise consecutive nodes starting from the node where D is mapped by our hashing function.

An important point to dicuss here is that even though any data might be mapped to a particular virtual node, the virtual node is not the master node for this data for either read or right. A client can request to read or write a data from any node they want. This is essential in creating a highly available system.

Q: How does a write/read happen in our system?

A:

Write request:

A client can contact any node in the ring with a put() request to write data, this node acts as a coordinating node for this request. Coordinating node then forwards the request to the mapping nodes for the data(hashing) and waits for acknowledgement from them. When it receives W(explained later) acknowledgements, it returns a write-accepted message to the client.

Read request:

To perform a get() request, client can connect to any node in the ring which becomes the coordinating node for this request. The coordinating node then asks all replica nodes for this data and returns consolidated data to the client when R of them replies back.

Read and Write consistency:

W and R are called write and read consistency number respectively. To recap, W is the minimum number of nodes from which the coordinating node should get an ack before making a write successful and R is the minimum number of nodes from which the coordinating node should get back read values to return them back to the client.

R, W together forms quorum of the system. For a read to be consistent(return the latest write), we need to keep W + R > P.

Depending on the feature requirement W and R can be adjusted, for example to have very fast writes we can keep W = 1 and R = P. If our system is read heavy we can keep R = 1 and W = P. If read and write are equally distributed, we can keep both R and W as (P+1)/2.

Write request:

A client can contact any node in the ring with a put() request to write data, this node acts as a coordinating node for this request. Coordinating node then forwards the request to the mapping nodes for the data(hashing) and waits for acknowledgement from them. When it receives W(explained later) acknowledgements, it returns a write-accepted message to the client.

Read request:

To perform a get() request, client can connect to any node in the ring which becomes the coordinating node for this request. The coordinating node then asks all replica nodes for this data and returns consolidated data to the client when R of them replies back.

Read and Write consistency:

W and R are called write and read consistency number respectively. To recap, W is the minimum number of nodes from which the coordinating node should get an ack before making a write successful and R is the minimum number of nodes from which the coordinating node should get back read values to return them back to the client.

R, W together forms quorum of the system. For a read to be consistent(return the latest write), we need to keep W + R > P.

Depending on the feature requirement W and R can be adjusted, for example to have very fast writes we can keep W = 1 and R = P. If our system is read heavy we can keep R = 1 and W = P. If read and write are equally distributed, we can keep both R and W as (P+1)/2.

Q: What if a machine goes down?

A: Since no node is only responsible for a piece of data, it's

going down won't really affect our writes/reads. As long as W out of P

nodes are available for some key, it can be updated(similarily for read).

Note that in case of less than W nodes available to write for some data, we can relax our write consistency(sacrificing data consistency for availability).

Note that in case of less than W nodes available to write for some data, we can relax our write consistency(sacrificing data consistency for availability).

Q: What kind of consistency can we provide?

A: If we keep W = P, we can provide strong consistency

but we won't be available for some writes even if one of our DB machine dies.

Earlier we saw in master-master configuration that in network partition cases, our masters may diverge to provide availability. In our current system, essentially all of our nodes are master and the point that they will diverge should be taken for granted and we should build our system considering it.

Therefore we should build for the case where W is less than P, hence our writes will be propagated i.e. some machines will have an updated view of data and some will not, therefore they are not consistent. The best we can guarentee here is eventual consistency, that is in due time, all the changes will be applied to every server and in the end they will all have a consistent view of the data.

To achieve eventual consistency, we need to be able to resolve differences between data on two servers. There are a couple of detect and resolve data conflicts that may arise.

First, if data(key, value) we store is such that value is just a single column, we can use a simple criteria of LWW(last write wins) to resolve conflicts. So if two servers have different view of a key, in the resolve step we can update the server with the stale with the new data and therefore become consistent.

The other way is to store augmented data for each row indicating all the coordinating nodes for the row till now. Now, to detect and understand conflict we can compare the augmented data. If one is a subset of the other(all the writes seen by one of the row has been seen by the other row) we can safely ignore the one with smaller augmented data. Otherwise, we have a conflit for our row and need application level logic to resolve it. This way is usually required when our value if composed of more than one independent column.

High availability Architechture

https://www.getfilecloud.com/blog/an-introduction-to-high-availability-architecture/

Earlier we saw in master-master configuration that in network partition cases, our masters may diverge to provide availability. In our current system, essentially all of our nodes are master and the point that they will diverge should be taken for granted and we should build our system considering it.

Therefore we should build for the case where W is less than P, hence our writes will be propagated i.e. some machines will have an updated view of data and some will not, therefore they are not consistent. The best we can guarentee here is eventual consistency, that is in due time, all the changes will be applied to every server and in the end they will all have a consistent view of the data.

To achieve eventual consistency, we need to be able to resolve differences between data on two servers. There are a couple of detect and resolve data conflicts that may arise.

First, if data(key, value) we store is such that value is just a single column, we can use a simple criteria of LWW(last write wins) to resolve conflicts. So if two servers have different view of a key, in the resolve step we can update the server with the stale with the new data and therefore become consistent.

The other way is to store augmented data for each row indicating all the coordinating nodes for the row till now. Now, to detect and understand conflict we can compare the augmented data. If one is a subset of the other(all the writes seen by one of the row has been seen by the other row) we can safely ignore the one with smaller augmented data. Otherwise, we have a conflit for our row and need application level logic to resolve it. This way is usually required when our value if composed of more than one independent column.

High availability Architechture

https://www.getfilecloud.com/blog/an-introduction-to-high-availability-architecture/

Design a distributed key value store which is highly consistent and is network partition tolerant.

Understanding System:

Features:

This is the first part of any system

design interview, coming up with the features which the system should support.

As an interviewee, you should try to list down all the features you can think

of which our system should support. Try to spend around 2 minutes for this

section in the interview. You can use the notes section alongside to remember

what you wrote.

- Q: What is

the amount of data that we need to store?

Anwer: Let's assume a few 100 TB. - Q: Do we

need to support updates?

A: Yes. - Q: Can the

size of the value for a key increase with updates?

A: Yes. In other words, its possible a sequence of keys could co-exist on one server previously, but with time, they grew to a size where all of them don't fit on a single machine. - Q: Can a

value be so big that it does not fit on a single machine?

A: No. Let's assume that there is an upper cap of 1GB to the size of the value. - Q: What

would the estimated QPS be for this DB?

A: Let's assume around 100k

Estimation:

This is usually the second part of a design interview, coming up

with the estimated numbers of how scalable our system should be. Important

parameters to remember for this section is the number of queries per second and

the data which the system will be required to handle.

Try to spend around 5 minutes for this section in the interview.

Try to spend around 5 minutes for this section in the interview.

Total storage size : 100 TB as estimated earlier

Total estimated QPS : Around 10M

Q: What is the minimum number of machines required to store the data?

Total estimated QPS : Around 10M

Q: What is the minimum number of machines required to store the data?

A: Assuming a machine has 10TB of hard disk, we would

need minimum of 100TB / 10 TB = 10 machines to store the said data. Do note

that this is bare minimum. The actual number might be higher if we decide to

have replication or more machines incase we need more shards to lower the QPS

load on every shard.

Design

Goals:

·

Latency - Is this problem very latency sensitive (Or in other

words, Are requests with high latency and a failing request, equally bad?). For

example, search typeahead suggestions are useless if they take more than a

second.

·

Consistency - Does this problem require tight consistency? Or is it

okay if things are eventually consistent?

·

Availability - Does this problem require 100% availability?

There could be more goals depending on the problem. It's

possible that all parameters might be important, and some of them might

conflict. In that case, you’d need to prioritize one over the other.

Q: Is Latency a very important metric for us?

A: No, but it would be good to have a lower latency.

Q: Consistency vs Availability?

A: As the question states, we need tight consistency and

partitioning. Going by the CAP theorem ( Nicely explained at http://robertgreiner.com/2014/08/cap-theorem-revisited/),

we would need to compromise with availability if we have tight consistency and

partitioning. As is the case with any storage system, data loss is not

acceptable.

Deep Dive:

Lets dig deeper into every component one by one. Discussion for

this section will take majority of the interview time(20-30 minutes).

Note : In questions like these, the interviewer is looking

at how you approach designing a solution. So, saying that I’ll use a NoSQL DB

like HBase is not an ideal answer. It is okay to discuss the architecture of

HBase for example with rationale around why some components were designed the

way they were.

Q: Is sharding required?

A: Lets look at our earlier estimate about the data

to be stored. 100TB of data can’t be stored on a single machine.

Let's say that we somehow have a really beefy machine which can store that amount of data, that machine would have to handle all of the queries ( All of the load ) which could lead to a significant performance hit.

Let's say that we somehow have a really beefy machine which can store that amount of data, that machine would have to handle all of the queries ( All of the load ) which could lead to a significant performance hit.

Tip: You could argue that there can be multiple copies of

the same machine, but this would not scale in the future. As my data grows, its

possible that I might not find a big beefy enough machine to fit my data.

So, the best course of action would be to shard the data and

distribute the load amongst multiple machines.

Q: Should the data stored be normalized?

http://www.studytonight.com/dbms/database-normalization.php

http://www.studytonight.com/dbms/database-normalization.php

Q: Can I shard the data so that all the data required

for answering my most frequent queries live on a single machine?

A: Most applications are built to store data for a

user ( consider messaging for example. Every user has his / her own mailbox ).

As such, if you shard based on every user as a row, its okay to store data in a

denormalized fashion so that you won’t have to query information across users.

In this case, lets say we go with storing data in denormalized fashion.

A: If the data is normalized, then we need to join

across tables and across rows to fetch data. If the data is already sharded

across machine, any join across machines is highly undesirable ( High latency,

Less indexing support ).

With storing denormalized information however, we would be storing the same fields at more than one place. However, all information related to a row ( or a key ) would be on the same machine. This would lead to lower latency.

However, if the sharding criteria is not chosen properly, it could lead to consistency concerns ( After all, we are storing the same data at multiple places ).

With storing denormalized information however, we would be storing the same fields at more than one place. However, all information related to a row ( or a key ) would be on the same machine. This would lead to lower latency.

However, if the sharding criteria is not chosen properly, it could lead to consistency concerns ( After all, we are storing the same data at multiple places ).

Q: How many machines per shard ? How does a read /

write look in every shard?

Q: Can we keep just one copy of data?

A: Since there is only one copy of the data, reading

it should be consistent. As long as there are enough shard to ensure a

reasonable load on each shard, latency should be acceptable as well. Reads and

writes would work exactly how they work with a single DB just that there would

be a row -> shard -> machine IP ( Given a row, tell me the shard it

belongs to and then given the shard, give me the machine I should be querying /

writing to ) resolution layer in between.

There is just one tiny problem with this model. What if the machine in the shard goes down? Our shard will be unavailable ( which is fine as governed by the CAP theorem ). However, what if the machine dies and its hard disk becomes corrupt. We suddenly run into the risk of losing the data which is not acceptable. Imagine losing all your messages because your shard went down and the hard disk got corrupted. That means we definitely need more than one copy of data being written with us.

There is just one tiny problem with this model. What if the machine in the shard goes down? Our shard will be unavailable ( which is fine as governed by the CAP theorem ). However, what if the machine dies and its hard disk becomes corrupt. We suddenly run into the risk of losing the data which is not acceptable. Imagine losing all your messages because your shard went down and the hard disk got corrupted. That means we definitely need more than one copy of data being written with us.

Q: What problem may arise if we keep multiple copies of data?

A: Let's say we keep 3 copies of data ( The probability of all

3 machines dying and having a corrupted disk is negligible ). Now, the issue is

how do we maintain all of the copies in absolute sync ( Consistency, remember?

).

One naive way would be that a write would not succeed unless its written to all 3 copies / machines. That would make our write latency go up significantly apart from making writes very unreliable ( My write fails if it fails on any of the machines ). Let's see if we can make this a bit better.

If we have to allow writes succeeding even if the write has been written on a majority of machines (2 out of 3, let's say), to maintain consistency, its important that there is a master machine which keeps track of this information. This master machine can track which machines have a particular block in each shard. This means that every read will go through this master machine, figure out the machines with the block and query from the required block. The machines which do not have the block can check with this master machine to see which block are not present on it, and catch up to replicate the block on it.

However, now if this master machine dies, our whole shard is unavailable till this machine comes back up. If this machine has a corrupted hard disk, then the unavailability becomes indefinite ( Note that we do not loose data in this case, as total data is the union of data present on 3 nodes ). This is not an ideal design, but we will talk about improvements to it later in this question.

One naive way would be that a write would not succeed unless its written to all 3 copies / machines. That would make our write latency go up significantly apart from making writes very unreliable ( My write fails if it fails on any of the machines ). Let's see if we can make this a bit better.

If we have to allow writes succeeding even if the write has been written on a majority of machines (2 out of 3, let's say), to maintain consistency, its important that there is a master machine which keeps track of this information. This master machine can track which machines have a particular block in each shard. This means that every read will go through this master machine, figure out the machines with the block and query from the required block. The machines which do not have the block can check with this master machine to see which block are not present on it, and catch up to replicate the block on it.

However, now if this master machine dies, our whole shard is unavailable till this machine comes back up. If this machine has a corrupted hard disk, then the unavailability becomes indefinite ( Note that we do not loose data in this case, as total data is the union of data present on 3 nodes ). This is not an ideal design, but we will talk about improvements to it later in this question.

Q: What if the master keeping track of where the blocks are

stored dies?

Anwer: To overcome this problem we keep a standby master which in

the failover process becomes the acting master and keeps unavilability to

minimum. Now, to keep the standby master upto date we can have a shared network

file system. When any namespace modification is performed by the Active master,

it durably logs a record of the modification to an edit log file stored in the

shared directory. The Standby node constantly watches this directory for edits,

and when edits occur, the Standby node applies them to its own namespace. In

the event of a failover, the Standby will ensure that it has read all of the

edits from the shared storage before promoting itself to the Active state. This

ensures that the namespace state is fully synchronized before a failover

occurs.

A: Going back to our design goals, latency and

consistency are our design goals.

A simple way to resolve this is to make sure we only have one machine per shard. Reads and writes would work exactly how they work with a single DB. However, if the machine holding the only copy dies and its hard disk becomes corrupt, we suddenly run into the risk of losing the data which is not acceptable. That means we definitely need more than one copy of data being written with us. Lets say that number is 3. Now, the issue is how do we maintain all of the copies in absolute sync ( Consistency, remember? ).

One naive way would be that a write would not succeed unless its written to all 3 copies / machines. That would make our write latency go up significantly apart from making writes very unreliable ( My write fails if it fails on any of the machines ).

If we have to allow writes succeeding when the write has been written on a majority of machines ( 2 out of 3, lets say ), to maintain consistency, its important that there is a master machine which keeps track of this information. This master machine can track which machines have a particular block in each shard. However, now if this master machine dies, our whole shard is unavailable till this machine comes back up. If this machine has a corrupted hard disk, then the unavailability becomes indefinite.

There are couple of ways to keep unavailability to minimum using a standby master keeping track of master node data through a shared file system(Explained in detail in the last hint).

4. Scalability Examples:A simple way to resolve this is to make sure we only have one machine per shard. Reads and writes would work exactly how they work with a single DB. However, if the machine holding the only copy dies and its hard disk becomes corrupt, we suddenly run into the risk of losing the data which is not acceptable. That means we definitely need more than one copy of data being written with us. Lets say that number is 3. Now, the issue is how do we maintain all of the copies in absolute sync ( Consistency, remember? ).

One naive way would be that a write would not succeed unless its written to all 3 copies / machines. That would make our write latency go up significantly apart from making writes very unreliable ( My write fails if it fails on any of the machines ).

If we have to allow writes succeeding when the write has been written on a majority of machines ( 2 out of 3, lets say ), to maintain consistency, its important that there is a master machine which keeps track of this information. This master machine can track which machines have a particular block in each shard. However, now if this master machine dies, our whole shard is unavailable till this machine comes back up. If this machine has a corrupted hard disk, then the unavailability becomes indefinite.

There are couple of ways to keep unavailability to minimum using a standby master keeping track of master node data through a shared file system(Explained in detail in the last hint).

http://highscalability.com/blog/2013/4/15/scaling-pinterest-from-0-to-10s-of-billions-of-page-views-a.html

http://www.rightscale.com/blog/enterprise-cloud-strategies/architecting-scalable-applications-cloud

http://highscalability.com/youtube-architecture

Useful Reads:

https://www.interviewbit.com/courses/system-design/topics/storage-scalability/#problems

http://coding-geek.com/how-databases-work/

http://www.tonido.com/blog/index.php/2013/12/05/scaling-your-databases/#.V5L837h95nI

https://en.wikipedia.org/wiki/Eventual_consistency

https://en.wikipedia.org/wiki/ACID

http://www.lecloud.net/tagged/scalability

Questions:http://www.lecloud.net/tagged/scalability

- Clones

- Using NoSQL instead of scaling a relational database

- Caching

- Being asynchronous

http://stackoverflow.com/questions/12215002/why-are-relational-databases-having-scalability-issues

No comments:

Post a Comment